We live in a society driven by data. Recent surges in data collection technologies and the widespread adoption of sensor-based hardware have put more “data at the fingertips” than ever before. As a result, organizations – including public safety and government agencies – are becoming more and more data-driven.

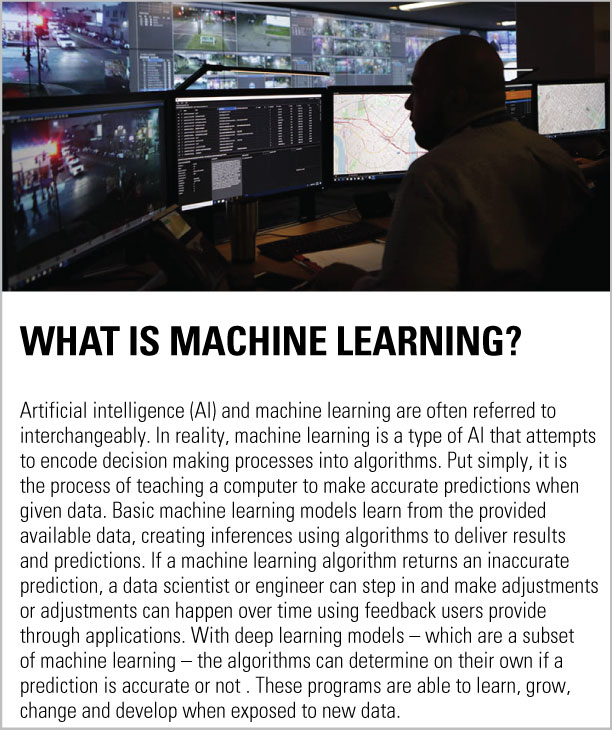

Today, intelligent cameras, sensors, agency personnel and even the general public are potential sources of public safety data. This extensive supply of data is the fuel driving artificial intelligence (AI) and machine learning. However, not all data is created equal. And while getting data isn’t a challenge – getting the right kind is.

The current belief is that the efficacy of AI is largely limited by the amount of available data to train algorithms on. If there is enough data, new AI systems can be introduced or existing systems can be improved. This mindset has led to the view of data as the “new oil” of the digital economy. In truth, poor data quality is the biggest barrier to widespread, profitable AI and machine learning.

Like technologies that have come before, the true value of AI and machine learning lies in the quality of data provided – clean, tagged, reliable and consistent. No matter how good an AI model is, if the data going into the model is poor, the model will be unable to provide accurate, actionable insights – which is key in today’s mission-critical public safety environment. For machine learning-powered applications in particular, the raw data is often not in a form that is suitable for learning. Features identified and extracted from the raw data tend to be domain-specific and would benefit from additional expertise provided by subject matter experts. In essence – for AI and machine learning – “garbage in, garbage out.”

Unfortunately, less than three percent of data meets basic quality standards, with 47 percent of new records containing a critical error. While organizations believe they are sitting on a gold mine of data, many fail to realize that they are, in reality, making critical business decisions using bad data – containing missing fields, wrong formatting, duplicates or irrelevant information. Within the public service industry, 78 percent of agencies are basing critical decisions on data that can’t always be verified – a costly practice in an economy where the estimated annual cost of bad data is a whopping $3.6 trillion.
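To make the categories of bad data above concrete, here is a minimal sketch of an audit that counts records with missing fields, wrong formatting or duplicates. The schema (`incident_id`, `timestamp`, `location`), the timestamp format and the function name `audit_records` are all hypothetical, chosen only for illustration; a real agency dataset would need its own rules.

```python
import re
from collections import Counter

# Hypothetical schema: these field names are assumptions for illustration only.
REQUIRED_FIELDS = ("incident_id", "timestamp", "location")
# Assumed timestamp format: YYYY-MM-DDTHH:MM:SS
TIMESTAMP_PATTERN = re.compile(r"^\d{4}-\d{2}-\d{2}T\d{2}:\d{2}:\d{2}$")


def audit_records(records):
    """Count basic quality problems in a list of dict records."""
    issues = Counter()
    seen = set()
    for record in records:
        # Missing field: key absent, None, or empty string.
        if any(record.get(field) in (None, "") for field in REQUIRED_FIELDS):
            issues["missing_field"] += 1

        # Wrong formatting: timestamp present but not in the assumed format.
        ts = record.get("timestamp")
        if ts and not (isinstance(ts, str) and TIMESTAMP_PATTERN.match(ts)):
            issues["bad_format"] += 1

        # Duplicate: same values for all required fields as an earlier record.
        key = tuple(record.get(field) for field in REQUIRED_FIELDS)
        if key in seen:
            issues["duplicate"] += 1
        seen.add(key)
    return dict(issues)


sample = [
    {"incident_id": "A1", "timestamp": "2018-11-30T08:15:00", "location": "Main St"},
    {"incident_id": "A1", "timestamp": "2018-11-30T08:15:00", "location": "Main St"},
    {"incident_id": "A2", "timestamp": "11/30/2018", "location": "Oak Ave"},
    {"incident_id": "A3", "timestamp": "2018-11-30T09:00:00", "location": ""},
]
print(audit_records(sample))
```

Even a simple count like this gives an organization a first measure of how much of its “gold mine” is actually usable.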

So what can public safety organizations do?

Defining and assessing data quality is a difficult task, particularly as data is captured in one context and used in totally different environments across the public safety workflow. More specifically, data quality assessment is domain-specific, less objective and generally requires significant human involvement.

For public safety “mission-critical data” there is a unique set of requirements that dictate adherence to federal Criminal Justice Information Services (CJIS), state and local standards for security and privacy of law enforcement-provided data. In addition, there is an abundance of new data sources whose potential has not yet been fully leveraged. Smart city sensors, wearable devices, car sensors revealing driving patterns, environmental sensors, and user-generated content in social media are only part of a new, rapidly evolving data ecosystem waiting to be explored. With all of these data sources, one of the biggest challenges for public safety is the lack of data discoverability and – more specifically – the lack of a common structure and semantic model for data that bears the same information type and comes from similar sources.

To ensure that AI and machine learning can produce critical, timely and accurate information, the data must be correct – properly labeled and cleaned, with sufficient accuracy – cover the entire range of inputs, and be examined for bias. Data that meets these standards is required to reduce bias in predictive models. Data quality and understanding are also a pivotal part of “Explainable AI”, which enables our users to understand, appropriately trust and effectively manage the emerging generation of artificially intelligent partners – a key driver for public safety.
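One simple way to check whether training data covers the entire range of inputs is to compare the labels actually present against the categories the model is expected to handle. The sketch below is an illustration under assumptions: the function name `coverage_report`, the 5 percent threshold and the lighting-condition labels are hypothetical, and a real bias review would go well beyond label counts.

```python
from collections import Counter


def coverage_report(labels, expected, min_share=0.05):
    """Report expected categories that are absent or rare in the labels.

    min_share is an assumed cutoff: categories below this fraction of
    the data are reported as underrepresented.
    """
    counts = Counter(labels)
    total = len(labels)
    # Categories the model should handle but the data never shows.
    missing = sorted(set(expected) - set(counts))
    # Categories that appear, but in too small a share of the data.
    underrepresented = sorted(
        c for c in expected if c in counts and counts[c] / total < min_share
    )
    return {"missing": missing, "underrepresented": underrepresented}


# Hypothetical example: lighting conditions tagged on camera footage.
labels = ["day"] * 96 + ["dusk"] * 4
print(coverage_report(labels, expected=["day", "dusk", "night"]))
```

A report like this does not prove a dataset is unbiased, but it flags obvious gaps before a model is trained on them.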

Improved data collection methods and a solid process for data management are important. In order to collect good data, companies need to incentivize users. Products should be designed in a way that makes it easy for users to contribute their data, which in turn feeds back into improvements of the underlying models that power their applications. Good usability and user experience will further encourage individuals to contribute valuable information. In addition, public safety agencies need to look at their data – scrutinizing data patterns for anomalies. AI and machine learning technologies should be monitored on an ongoing basis to understand, detect and resolve potential errors or biases.
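As a rough illustration of scrutinizing data patterns for anomalies, the sketch below flags unusual values in a numeric series using the modified z-score, a common robust technique based on the median and the median absolute deviation (MAD). This is one possible approach, not a method prescribed here; the function name `flag_anomalies`, the daily report counts and the conventional 3.5 cutoff are assumptions for the example.

```python
from statistics import median


def flag_anomalies(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds threshold.

    Modified z-score: 0.6745 * (x - median) / MAD, where MAD is the
    median absolute deviation. Returns an empty list when MAD is zero,
    since the score is undefined in that case.
    """
    if not values:
        return []
    center = median(values)
    mad = median(abs(v - center) for v in values)
    if mad == 0:
        return []
    return [
        i
        for i, v in enumerate(values)
        if abs(0.6745 * (v - center) / mad) > threshold
    ]


# Hypothetical daily counts of incoming reports from one sensor feed.
daily_counts = [12, 14, 13, 15, 11, 13, 90, 12]
print(flag_anomalies(daily_counts))  # index of the unusual day
```

Flagged values are candidates for human review rather than automatic deletion: a spike may be a faulty sensor, or it may be a real event.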

Ultimately, ensuring the collection and input of high-quality data is a foundational step towards fully embracing and engaging with AI and machine learning.

Jeanne Weed

November 30, 2018

Very interesting article. Seems like bad data is worse than no data at all? These stats are alarming but also leave things wide open for improvement: “Within the public service industry, 78 percent of agencies are basing critical decisions on data that can’t always be verified – a costly practice in an economy where the estimated annual cost of bad data is a whopping $3.6 trillion.”